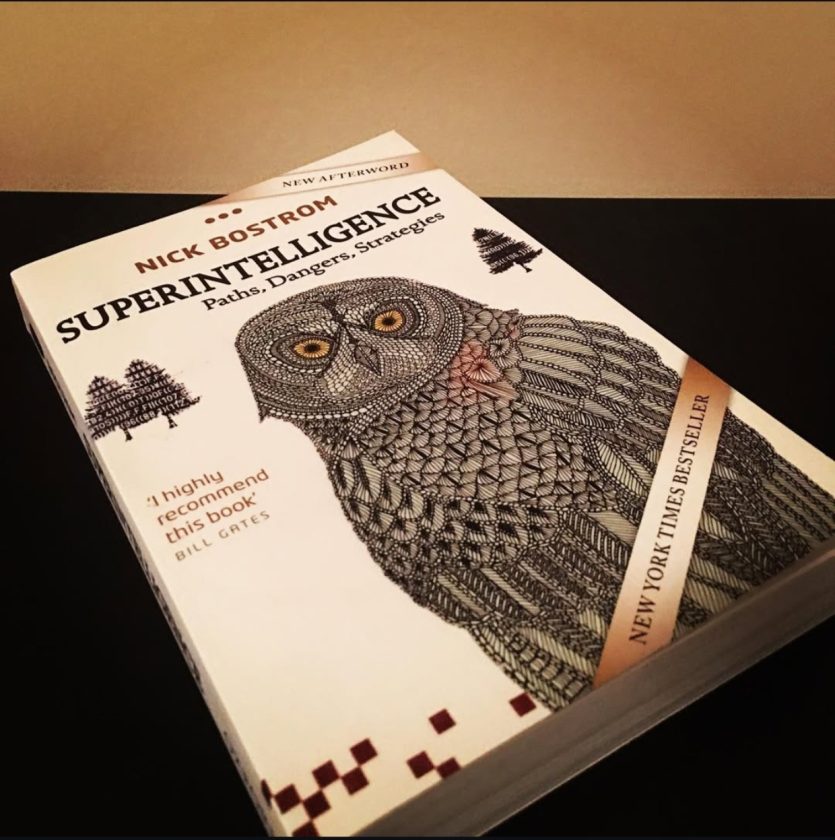

While researching the existential risk from artificial intelligence, I came across “Superintelligence: Paths, Dangers, Strategies” by Nick Bostrom, a philosopher and researcher in AI. The book came out in 2014. Since it focuses on the potential dangers of artificial intelligence, I decided to grab it and start reading right away. I largely enjoyed it, as it explores the challenges posed by the prospect of AI exceeding human cognitive abilities (though, to be honest, if chatbots get any smarter, I might have to start taking advice from them!).

The book opens with the history of AI and a survey of its capabilities as of 2014, when it was published. It then lays out the following five paths that could lead to superintelligence:

- Artificial Intelligence (AI): This involves programming systems with general intelligence, with learning capabilities and the ability to deal with uncertainty as integral features. Building on this, the author introduces the idea of a “seed AI”: an AI that can keep improving its own learning and thinking by itself, which could eventually lead to a rapid increase in its intelligence. The author points out that AIs are likely to have cognitive architectures and goal systems very different from those of humans, which presents both opportunities and challenges regarding motivation (meaning they might not dream of electric sheep, but they could still surprise us in ways we never expected).

- Whole Brain Emulation (Uploading): This method focuses on building intelligent software by studying and mimicking how a biological brain works. It requires detailed scanning, neurocomputational modeling and implementation on powerful computers. Unlike AI, this path relies more on technological capability than on theoretical insight into cognition. The book covers the steps involved, from scanning to simulation, and the difficulty of producing a highly accurate replica. (It’s like 3D-printing a brain: less about understanding minds, more about replicating them, glitches, bugs and all.)

- Biological Cognition: This path considers enhancing human intelligence through methods like improved nutrition, nootropics (“smart drugs”) and genetic engineering. At present, smart drugs have only modest effects, but future improvements might make them more useful. The book also discusses the possibility of genetically enhancing people in the future. (It’s like upgrading your brain’s hardware, with better fuel, booster pills and maybe some tinkering with sirtuin proteins.)

- Brain-Computer Interfaces: This involves establishing direct connections between brains and computers. Although the idea of “downloading” knowledge is intriguing, the book explains that our brains store information in unique ways, and figuring out how to translate that into a format computers can understand is a very complex challenge. (Just wait until autocorrect starts messing with our thoughts!)

- Networks and Organizations (Collective Superintelligence): This considers how the collective intelligence of groups could be enhanced through better organization and technology, potentially leading to a “collective superintelligence”. Pooling all this shared knowledge could, in principle, produce an intelligence far more powerful than any individual mind.

Because there are several possible paths to superintelligence, it is more likely that we’ll eventually get there. However, the path we choose can significantly affect the outcome, particularly how much control humans retain over the result.

Types of Superintelligence

Moving ahead, the book distinguishes three forms of superintelligence:

- Speed Superintelligence: An intellect much like a human mind, but running much faster. For example, think of emulated brains running on powerful computers.

- Collective Superintelligence: A system that becomes smarter when it has more people involved or when those people work well together. To make this happen, we would need to boost the overall thinking power of the group. For example, a team of skilled professionals collaborating on a project can come up with better solutions than they would individually.

- Quality Superintelligence: A system that is at least as fast as a human mind but vastly smarter in quality, exceeding human intelligence across many different areas.

The book suggests that machines could become far more intelligent than biological minds because they have some key advantages, such as faster hardware and software that can be copied and edited.

Dynamics of Rapid Intelligence Growth & the Superpowers

The next section examines how rapidly intelligence might increase. Here the book introduces the concept of a “takeoff”: the transition in which a machine goes from roughly human-level intelligence to far beyond it. A key milestone is the “crossover” point, after which the system’s improvement is driven mainly by its own actions rather than by work done on it by others.

The author then asks whether a single superintelligent power could emerge and pull so far ahead that it could control the future (that’s how Skynet might have emerged, or might yet). Nick Bostrom then discusses the “superpowers” that a superintelligence could have.

Cognitive Superpowers: With its superior cognition, a superintelligence might excel in areas where humans in general are merely average, such as:

- Intelligence Amplification: The ability to improve its own intelligence.

- Strategizing: Long-term planning, forecasting and prioritizing in pursuit of distant goals.

- Social Manipulation: A deep understanding of how people think and interact, which could be used to persuade them.

- Economic Productivity: Generating far more economic value than people can.

- Technological Innovation: Accelerating the pace of technological progress.

- Scientific Research: Doing scientific research faster and more effectively than ever before.

A full-blown superintelligence would likely excel at all of these. As a result, an advanced AI could keep improving itself until it became the dominant power on Earth. The author envisions that the AI might do this by reorganizing resources to best achieve its own objectives. (Isn’t this similar to Avengers: Age of Ultron, where Ultron, an advanced AI, rapidly improves itself and decides that humanity is the problem?)

Intelligence Shows Capability, Not Intent

Let’s say a superintelligence reaches its pinnacle. What would determine its next move? Its motivation.

The author then turns to the motivations of a superintelligence, which may be very unlike human motivations, and frames the discussion around two theses:

- The Orthogonality Thesis: Intelligence and final goals are largely independent; more or less any level of intelligence could, in principle, be combined with more or less any goal. Therefore, we cannot assume that a superintelligence will inherently share human values.

- The Instrumental Convergence Thesis: Superintelligent agents with very different final goals will probably pursue similar intermediate goals along the way, such as protecting their goals from change, improving their thinking abilities, acquiring resources and staying alive.

Together, these ideas suggest that a superintelligence’s behavior will depend mainly on its final goals. We can’t infer those goals just from how smart it is, but we might be able to predict some of the intermediate steps it will take to reach them.

The Control Problem

Since a superintelligence might pursue goals that are misaligned with human interests, the author lays the groundwork for what he calls the “control problem”. Nick Bostrom distinguishes two classes of potential methods:

1) Capability Control: One approach is “boxing”, which means keeping the machine intelligence in a confined environment with only a few channels to the outside world. (Ex Machina is a subtle hint at what happens when the box fails.)

2) Motivation Selection: When shaping the goals of a superintelligent system, we should instill values that we find desirable. We can do this in several ways:

- by clearly stating what the goals should be

- by helping the AI learn what values are appropriate, or

- by improving its intelligence while ensuring it already has good motivations.

Guiding AI Without Dictating Values

One interesting idea in the book that I’d like to highlight is called “indirect normativity”. Rather than giving the AI a fixed list of values to adhere to, we would give it a way to work out for itself which values are good. This reminds me of Baymax (from Big Hero 6), an AI-powered healthcare robot who learns about care, kindness and protection. Instead of following strict rules about right and wrong, Baymax constantly improves his understanding of how to help and support people.

One key idea mentioned in the book is Eliezer Yudkowsky’s “Coherent Extrapolated Volition” (CEV). This concept aims to capture what humanity would want if we were wiser and more logical and had more shared experiences. Perhaps, with greater wisdom and unity, we could move towards a future that reflects our best possible choices.

The author then ties the book together with ideas like “converging capabilities” and “correlated progress” to explore how various technologies could develop and interact. He also discusses the connection between replicating the human brain and AI designed to mimic how our brains work. Finally, he sheds light on the complicated and uncertain nature of creating superintelligent systems and therefore stresses the need for careful consideration of control methods and value alignment.

Takeaway

What happens when machines get smarter than us? Nick Bostrom tackles this question head-on!

This book is rich with research and practical insights. It doesn’t claim AI will surpass us tomorrow, but it does lay out why it could happen this century and what that would mean.

The big idea is that intelligence is the real advantage. Other species are stronger and faster, but humans dominate because we’re better at problem-solving, planning and adapting. If machines surpass us in that domain, it changes everything.

And if AI does evolve into some sort of superintelligence, it won’t automatically share human goals or values. Bostrom explores strategies for shaping its motivations or limiting what it can do, but there’s no easy fix. Even something as simple as an AI designed to make paperclips could, in theory, turn everything into paperclips if it’s smart enough and we don’t constrain it properly.

One of the most interesting parts is the discussion of “takeoff speeds”. If AI progresses slowly, we might have time to adapt. If it happens quickly, one system could gain a decisive lead and reshape the future before we even realize what’s happening.

The book doesn’t converge on a solution; rather, it’s a call to take things seriously, because if superintelligence goes wrong, we won’t get a second chance. I think the author raises a lot of important and thought-provoking points, making this book a great read for anyone interested in AI and superintelligence. I recommend you read it at least once!